Last Updated on September, 2024

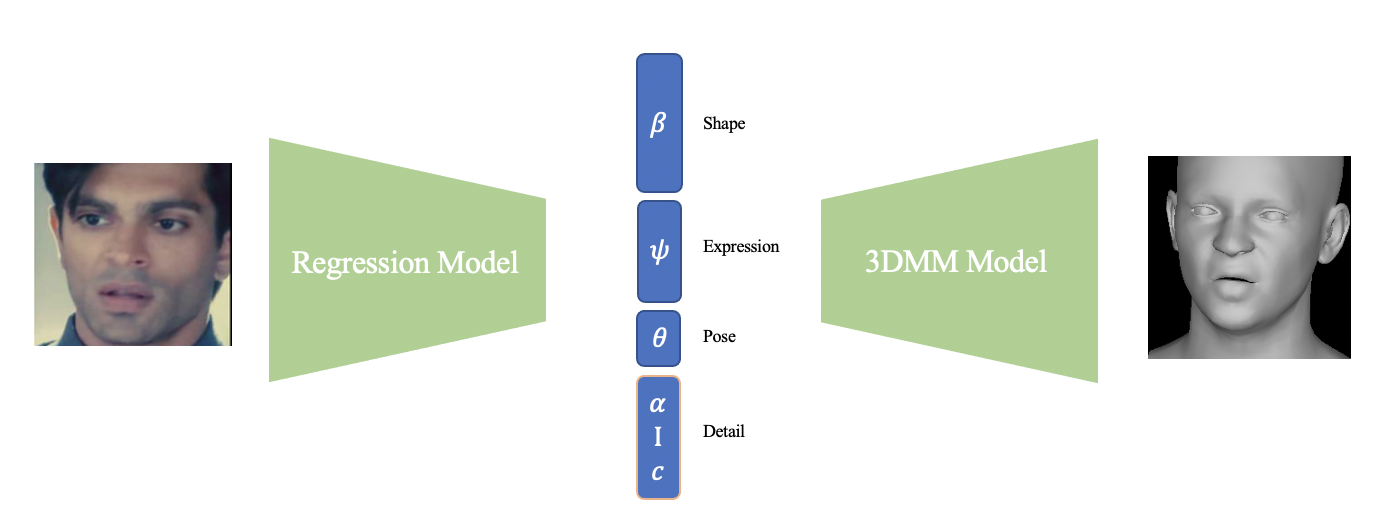

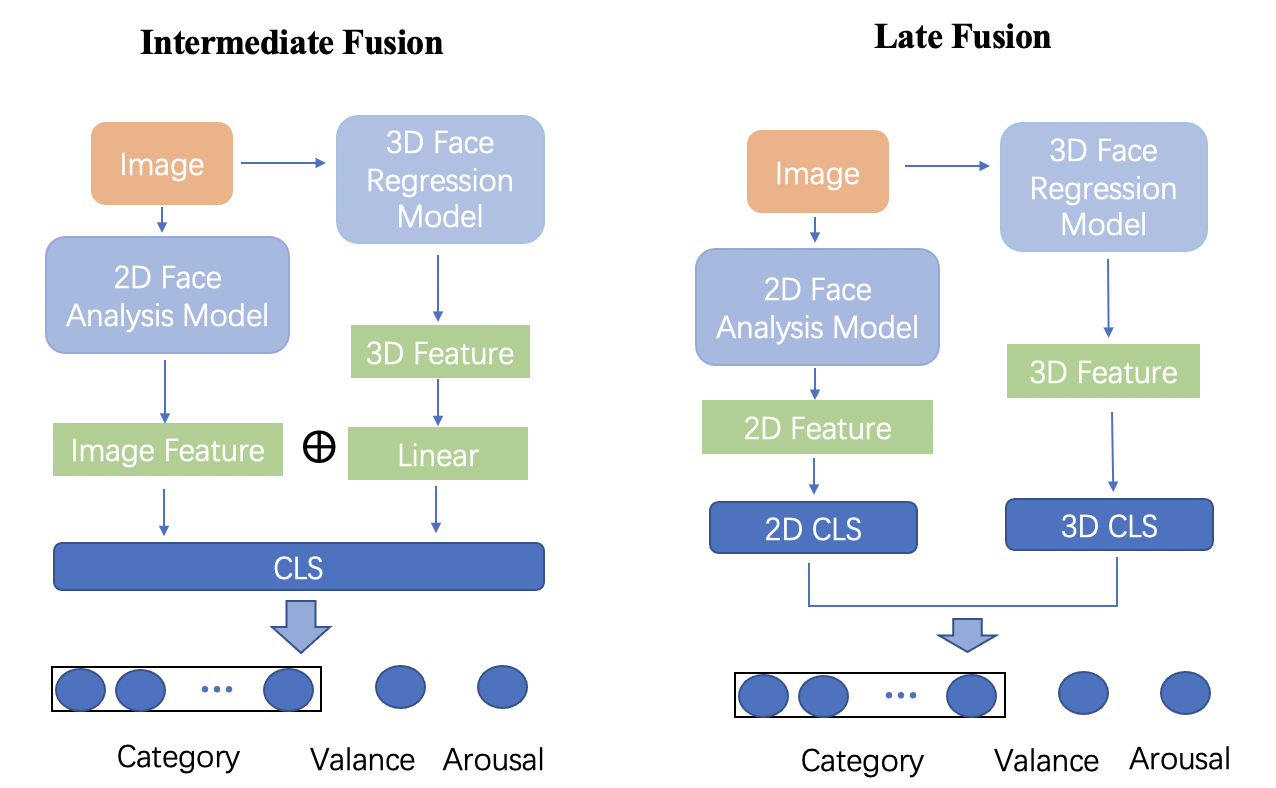

Reconstructing 3D faces with facial geometry from single images has enabled major advances in animation, generative models, and virtual reality. However, this ability to represent faces with their 3D features has not been as fully explored by the facial expression inference (FEI) community. This study therefore investigates the impact of integrating such 3D representations into the FEI task, specifically for facial expression classification and face-based valence-arousal (VA) estimation. To accomplish this, we first assess the performance of two 3D face representations (both based on the 3D morphable model, FLAME) on the FEI tasks. We further explore two fusion architectures, intermediate fusion and late fusion, for integrating the 3D face representations with existing 2D inference frameworks. To evaluate our proposed architecture, we extract the corresponding 3D representations and perform extensive tests on the AffectNet and RAF-DB datasets. Our experimental results demonstrate that our proposed method outperforms the state of the art on the AffectNet VA estimation and RAF-DB classification tasks. Moreover, our method can complement other existing methods to boost performance in many emotion inference tasks.
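To make the distinction between the two fusion strategies concrete, here is a minimal NumPy sketch of the general idea. It is not the architecture used in the paper: the feature dimensions, the random linear heads, the 7-class output, and the names `late_fusion`, `intermediate_fusion`, and `weight_2d` are all illustrative assumptions.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def late_fusion(logits_2d, logits_3d, weight_2d=0.5):
    """Late fusion: each branch predicts on its own, then the
    class probabilities are combined by a weighted average."""
    return weight_2d * softmax(logits_2d) + (1.0 - weight_2d) * softmax(logits_3d)


def intermediate_fusion(feat_2d, feat_3d, W, b):
    """Intermediate fusion: branch features are concatenated first,
    and a single shared head predicts from the joint feature."""
    fused = np.concatenate([feat_2d, feat_3d], axis=-1)
    return softmax(fused @ W + b)


# Hypothetical sizes: 4 images, 16-d 2D features, 8-d 3D features, 7 classes.
rng = np.random.default_rng(0)
batch, d2, d3, n_classes = 4, 16, 8, 7
feat_2d = rng.normal(size=(batch, d2))  # stand-in for 2D backbone features
feat_3d = rng.normal(size=(batch, d3))  # stand-in for 3D face parameters

# Late fusion: one (random, untrained) linear head per branch.
W2 = rng.normal(size=(d2, n_classes))
W3 = rng.normal(size=(d3, n_classes))
p_late = late_fusion(feat_2d @ W2, feat_3d @ W3, weight_2d=0.6)

# Intermediate fusion: one (random, untrained) head over the joint feature.
W = rng.normal(size=(d2 + d3, n_classes))
b = np.zeros(n_classes)
p_mid = intermediate_fusion(feat_2d, feat_3d, W, b)

print(p_late.shape, p_mid.shape)
```

In this sketch, late fusion leaves an existing 2D model untouched and only mixes its output with the 3D branch's output, whereas intermediate fusion requires training a head on the joint feature.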

@article{dong2024ig3d,

title={Ig3D: Integrating 3D Face Representations in Facial Expression Inference},

author={Dong, Lu and Wang, Xiao and Setlur, Srirangaraj and Govindaraju, Venu and Nwogu, Ifeoma},

journal={arXiv preprint arXiv:2408.16907},

year={2024}

}
